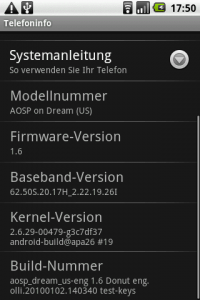

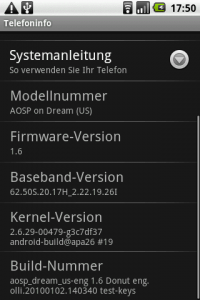

As Android 2.0 will probably be available for the G1/HTC Dream soon, I decided to keep up with the times and update to Android 1.6. Testing different Donut releases, such as CyanogenMod and the vanilla HTC version, frustrated me: support for processing camera images is still rubbish because of constant memory allocations and the garbage collector runs they trigger. Thus, I decided to bake my own donut. The guide below is heavily based on Johan de Koning’s Building Android 1.5 series and the Building For Dream Or Sapphire documentation.

Collecting the tools

In order to build and deploy your own Android 1.6 you need:

- A G1 with Android 1.6 and a bootloader (SPL) with fastboot enabled

- A computer running a recent Ubuntu, or Windows with Ubuntu in a virtual machine, with more than 14 GB of free disk space and more than 2 GB of RAM

On Windows 7, I downloaded and installed VirtualBox 3.1.2. The virtual machine needs around 14 GB of disk space and 2 GB of RAM. To get Linux, I downloaded Ubuntu 9.10, mounted the ISO image and installed Ubuntu inside VirtualBox. It’s also a good idea to install the VirtualBox Guest Additions and create a shared folder to exchange data with the host machine. My folder’s share name is exchange, and it can be mounted by typing the following into a terminal:

sudo mount -t vboxsf exchange /mnt

The next step is to install the packages we need to download the sources conveniently and compile the system:

sudo apt-get install git-core gnupg flex bison gperf libsdl-dev libesd0-dev libwxgtk2.6-dev build-essential zip curl libncurses5-dev zlib1g-dev

We also need Java, but some parts of the source tree are (still) not compatible with Java 6, and Java 5 is not available as a package for Ubuntu 9.10. Following the Enea guys, I used the packages from the previous Ubuntu release, Jaunty Jackalope. First, you have to add the Jaunty repositories to your sources list by typing:

sudo gedit /etc/apt/sources.list

in a terminal and add the following lines:

deb http://us.archive.ubuntu.com/ubuntu/ jaunty multiverse

deb http://us.archive.ubuntu.com/ubuntu/ jaunty-updates multiverse

Afterwards, Java 5 can be installed by typing:

sudo apt-get update

sudo apt-get install sun-java5-jdk
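If you prefer not to edit sources.list by hand, the two Jaunty lines can also be appended non-interactively. The following is a minimal sketch, not part of the original procedure: the helper names add_line_once and add_jaunty_sources are hypothetical, and a line is only appended if the file doesn’t already contain it, so running it twice does no harm.

```shell
# Sketch (hypothetical helpers, not part of Ubuntu or the Android tree).
# add_line_once FILE LINE: append LINE to FILE unless FILE already
# contains exactly that line, so running it twice changes nothing.
add_line_once() {
    local file="$1" line="$2"
    # -q: quiet, -x: whole-line match, -F: fixed string rather than regex
    if grep -qxF -- "$line" "$file" 2>/dev/null; then
        return 0
    fi
    # If the file does not end with a newline, add one first so the new
    # entry is not glued onto the last existing line.
    if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
        printf '\n' >> "$file"
    fi
    printf '%s\n' "$line" >> "$file"
}

# add_jaunty_sources FILE: add the two Jaunty multiverse entries used above.
add_jaunty_sources() {
    add_line_once "$1" "deb http://us.archive.ubuntu.com/ubuntu/ jaunty multiverse" &&
    add_line_once "$1" "deb http://us.archive.ubuntu.com/ubuntu/ jaunty-updates multiverse"
}
```

Assuming the functions are saved as add_sources.sh (a hypothetical file name), the real file, which belongs to root, can be updated with sudo bash -c '. ./add_sources.sh && add_jaunty_sources /etc/apt/sources.list', followed by the two apt-get commands above.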

We need two additional tools to proceed, which we will keep in a bin folder in our home directory:

cd ~

mkdir bin

The first tool is repo. We download it and make it executable:

curl http://android.git.kernel.org/repo >~/bin/repo

chmod a+x ~/bin/repo

The second tool is unyaffs, a program that extracts files from a YAFFS file system image:

curl http://unyaffs.googlecode.com/files/unyaffs >~/bin/unyaffs

chmod a+x ~/bin/unyaffs

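We put the bin folder on our path by adding the following line to ~/.bashrc (the second entry assumes the Android SDK was unpacked into your home directory as android-sdk-linux_86):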

export PATH=${PATH}:~/bin:~/android-sdk-linux_86/tools
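To check that the new PATH entries work, open a new terminal (or run source ~/.bashrc) and make sure the tools are actually found. A small sketch of such a check, using a hypothetical helper named missing_tools:

```shell
# Sketch (hypothetical helper): print every given command that cannot be
# found on PATH and return non-zero if at least one of them is missing.
missing_tools() {
    local tool status=0
    for tool in "$@"; do
        # command -v succeeds only if the name resolves to something runnable
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "missing: $tool"
            status=1
        fi
    done
    return "$status"
}
```

For example, missing_tools repo unyaffs java should print nothing and succeed once repo, unyaffs and Java 5 are installed and on the path.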

Getting the source and proprietary apps

The next step is to download the source code using repo and git. First, we create a folder for our source tree; then we can check out the Donut sources:

mkdir mydroid

cd mydroid

repo init -u git://android.git.kernel.org/platform/manifest.git -b donut-plus-aosp

repo sync

This will take a while: time to buy a six-pack (you’ll need it while the system compiles). When the checkout has finished (and you’re not too drunk), we can proceed.
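Because the checkout takes so long, a dropped connection can make repo sync abort halfway; running it again usually continues with the remaining projects. The following is a minimal sketch of a hypothetical retry helper (its name, the attempt count and the delay are assumptions) that reruns a command until it succeeds or the attempts run out:

```shell
# Sketch (hypothetical helper): retry MAX_TRIES DELAY COMMAND [ARGS...]
# runs COMMAND up to MAX_TRIES times, sleeping DELAY seconds between
# attempts, and returns the exit status of the last failed attempt.
retry() {
    local max_tries="$1" delay="$2" attempt=1 status
    shift 2
    until "$@"; do
        status=$?   # exit status of the failed attempt
        if [ "$attempt" -ge "$max_tries" ]; then
            echo "giving up after $attempt attempts: $*" >&2
            return "$status"
        fi
        echo "attempt $attempt failed (exit $status), retrying in ${delay}s" >&2
        attempt=$((attempt + 1))
        sleep "$delay"
    done
    return 0
}
```

Inside the mydroid folder, retry 5 60 repo sync would try the sync up to five times with a one-minute pause between attempts.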

Some proprietary binaries can’t be distributed for legal reasons (whatever they are), so we have to grab them ourselves. Download the “HTC Proprietary Binaries for ADP1” from HTC’s developer site, add the archive to your source tree in vendor/htc/dream-open/ and decompress it. Afterwards, connect your Android 1.6-equipped G1 to your computer and make the device available to the virtual machine. Then execute the file from the vendor/htc/dream-open/ directory.

We also need the Android 1.6 recovery image, which used to be available from http://developer.htc.com/adp.html. Unfortunately, the links are dead now (but the files are still there…). At the time of writing, you could get it by typing the following at the root of your source tree:

wget --referer="http://developer.htc.com" http://member.america.htc.com/download/RomCode/ADP/signed-dream_devphone_userdebug-ota-14721.zip
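Since the download depends on the referer trick, it is worth checking that you actually received a ZIP archive and not, for instance, an HTML error page saved under the .zip name. ZIP files start with the two bytes PK, which the hypothetical helper below checks:

```shell
# Sketch (hypothetical helper): succeed only if FILE exists, is non-empty
# and starts with the "PK" signature that every ZIP archive begins with.
looks_like_zip() {
    local file="$1"
    [ -s "$file" ] && [ "$(head -c 2 "$file")" = "PK" ]
}
```

For example, looks_like_zip signed-dream_devphone_userdebug-ota-14721.zip || echo "download failed"; the same check works for the system image zip. If unzip is installed, unzip -t performs a full integrity test of the archive.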

Then run the “unzip-files.sh” script from the vendor/htc/dream-open/ directory to unpack some further proprietary files for your device.

Since we also need some Google applications which are not open source (e.g. the Market, Google Maps, …), we will extract them from the system image, which we can download using:

wget --referer="http://developer.htc.com" http://member.america.htc.com/download/RomCode/ADP/signed-dream_devphone_userdebug-img-14721.zip

Create the folder htc inside your home directory and extract the zip file into it. Afterwards, we can unpack system.img using unyaffs:

cd ~/htc

unyaffs system.img

To copy the apps into your source tree, execute the attached copy_google_apps.sh script. In addition, you have to tell the build system to include these apps by replacing ~/mydroid/vendor/htc/dream-open/htc_dream.mk with an extended device_dream.mk.
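The attached copy_google_apps.sh is not reproduced here. Purely as an illustration of what such a copy step might look like, the hypothetical helper below copies named APKs from the unpacked image into a directory of your choice and stops at the first missing file. It assumes unyaffs unpacked the image’s app folder to ~/htc/app; the destination directory and the APK names are assumptions as well, so check the real names with ls ~/htc/app.

```shell
# Sketch (hypothetical stand-in for copy_google_apps.sh):
# copy_apks SRC_DIR DEST_DIR NAME.apk... copies each named APK from
# SRC_DIR into DEST_DIR and fails on the first file that is missing.
copy_apks() {
    local src="$1" dest="$2" name
    shift 2
    mkdir -p "$dest" || return 1
    for name in "$@"; do
        if [ ! -f "$src/$name" ]; then
            echo "not found: $src/$name" >&2
            return 1
        fi
        # -p keeps the original timestamps and permissions
        cp -p "$src/$name" "$dest/" || return 1
    done
}
```

A call might look like copy_apks ~/htc/app ~/mydroid/vendor/htc/dream-open/proprietary Vending.apk (Vending.apk is the Market); the destination here is only a placeholder and has to match whatever location your extended device_dream.mk copies the apps from.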

Now source the envsetup.sh script from the root of your source tree and run “lunch aosp_dream_us-eng” to configure the build system specifically for the G1/Dream:

. build/envsetup.sh

lunch aosp_dream_us-eng

The output should look like this:

============================================
PLATFORM_VERSION_CODENAME=REL
PLATFORM_VERSION=1.6
TARGET_PRODUCT=aosp_dream_us
TARGET_BUILD_VARIANT=eng
TARGET_SIMULATOR=false
TARGET_BUILD_TYPE=release
TARGET_ARCH=arm
HOST_ARCH=x86
HOST_OS=linux
HOST_BUILD_TYPE=release
BUILD_ID=Donut
============================================
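Before starting a build that takes hours, it can be worth confirming that lunch really selected the Dream configuration. lunch exports TARGET_PRODUCT and TARGET_BUILD_VARIANT into the current shell, so a hypothetical check like the following can be run in the same terminal right before make:

```shell
# Sketch (hypothetical helper): check_lunch PRODUCT VARIANT succeeds only
# if the variables exported by lunch match the expected values.
check_lunch() {
    local expected_product="$1" expected_variant="$2"
    if [ "${TARGET_PRODUCT:-}" != "$expected_product" ] ||
       [ "${TARGET_BUILD_VARIANT:-}" != "$expected_variant" ]; then
        echo "unexpected config: ${TARGET_PRODUCT:-unset}-${TARGET_BUILD_VARIANT:-unset}" >&2
        return 1
    fi
    echo "configured for $TARGET_PRODUCT-$TARGET_BUILD_VARIANT"
}
```

For this guide that would be check_lunch aosp_dream_us eng && make.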

Compile and deploy

Finally, grab the beer, go to the root of your source tree and type:

make
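If your (virtual) machine has more than one CPU, make can run several jobs in parallel. The hypothetical helper below counts the processors listed in /proc/cpuinfo and falls back to 1. Using one job per CPU is an assumption, not a requirement of the build system, and with only 2 GB of RAM a high job count may make the VM swap.

```shell
# Sketch (hypothetical helper): print the number of "processor" entries in
# /proc/cpuinfo (or in the file given as $1), falling back to 1.
cpu_count() {
    local info="${1:-/proc/cpuinfo}" n
    # grep -c prints the number of matching lines; on a missing file or
    # no match we fall back below.
    n=$(grep -c '^processor' "$info" 2>/dev/null) || n=0
    [ "$n" -gt 0 ] || n=1
    echo "$n"
}
```

A parallel build that also keeps a log could then be started with make -j"$(cpu_count)" 2>&1 | tee build.log, which helps when the actual error has scrolled by in the output of a parallel build.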

If everything went well, you will find the result in the out/target/product/dream-open directory. To deploy it on your G1, you have to boot into fastboot mode (shut down the device and power it up again while holding the BACK key). The fastboot tool is part of the Android SDK but can also be downloaded from HTC. I copied the files to Windows, but you could probably also flash them directly from Ubuntu:

fastboot flash boot boot.img

fastboot flash system system.img

fastboot flash recovery recovery.img

fastboot flash userdata userdata.img

fastboot reboot
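The same sequence can be wrapped in a small function that first checks that all four images exist, so you don’t stop halfway with only some partitions flashed. This is a hypothetical sketch; the FASTBOOT override is an addition for dry runs (FASTBOOT=echo prints the commands instead of running them).

```shell
# Sketch (hypothetical helper): flash_dream [IMAGE_DIR] flashes boot,
# system, recovery and userdata in the order used above, then reboots.
# FASTBOOT defaults to the fastboot binary on PATH.
flash_dream() {
    local dir="${1:-.}" part
    local fastboot="${FASTBOOT:-fastboot}"
    # Refuse to start unless every image is present.
    for part in boot system recovery userdata; do
        if [ ! -f "$dir/$part.img" ]; then
            echo "missing image: $dir/$part.img" >&2
            return 1
        fi
    done
    for part in boot system recovery userdata; do
        # $fastboot is left unquoted so an override may include arguments
        $fastboot flash "$part" "$dir/$part.img" || return 1
    done
    $fastboot reboot
}
```

With the phone in fastboot mode, run flash_dream out/target/product/dream-open from the root of your source tree. Keep in mind that flashing userdata.img replaces everything on the data partition.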

The first boot will take some time, but you can follow its progress with adb:

adb logcat
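Instead of watching the whole stream, you can dump the log once the phone has booted (adb logcat -d prints the current log and exits) and look only at the problems. The hypothetical helper below assumes logcat’s default brief format, where each line starts with the priority letter and the tag, e.g. E/Tag( 123): message, and keeps only error (E) and fatal (F) entries:

```shell
# Sketch (hypothetical helper): logcat_errors [FILE...] prints the error
# and fatal lines of a saved log in brief format; reads stdin without args.
logcat_errors() {
    grep -E '^[EF]/' "$@"
}
```

For example, adb logcat -d > boot.log && logcat_errors boot.log. logcat can also filter by itself: adb logcat '*:E' shows only messages of priority E and above.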

You should end up with a system that hopefully looks and behaves just like a vanilla Donut release. Time to change the source and do some serious stuff.

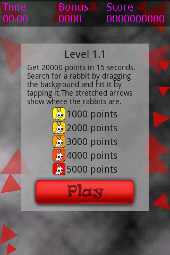

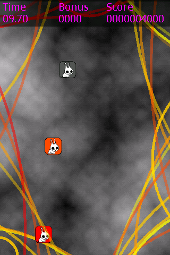

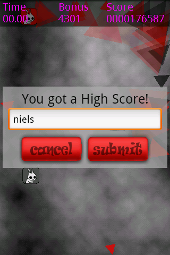

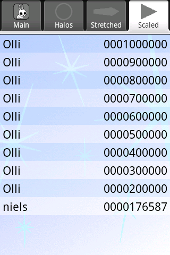
