If you want to process camera images on Android phones for real-time object recognition or content-based Augmented Reality, you have probably heard about the Camera Preview Callback memory issue. Each time your Java application gets a preview image from the system, a new chunk of memory is allocated. When the Garbage Collector frees this memory again, the system freezes for 100 to 200 ms. This is especially bad when the system is under heavy load (I do object recognition on a phone; hooray, it eats as much CPU power as possible). If you browse through the Android 1.6 source code, you realize that this happens only because the wrapper (that protects us from the native stuff) allocates a new byte array each time a new frame is available. Built-in native code can, of course, avoid this issue.

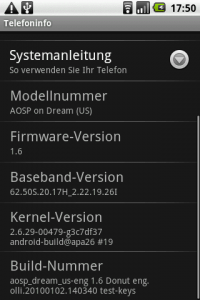

I still hope someone will fix the Camera Preview Callback memory issue properly, but in the meantime I fixed it, at least for my phone, by patching the source code of Donut (Android 1.6) so that I can build prototypes. What you find below is just an ugly hack I did for myself! To reproduce it, you should know how to compile Android from source.

Avoid memory allocation

Diving into the source code starts with the Java wrapper of the camera (Camera.java) and its native counterpart android_hardware_Camera.cpp. A Java application calls setPreviewCallback; this method calls the native function android_hardware_Camera_setHasPreviewCallback, and the call is passed further down into the system. When the driver delivers a new frame, it travels back up to the native wrapper and ends up in the function JNICameraContext::copyAndPost():

```cpp
void JNICameraContext::copyAndPost(JNIEnv* env, const sp<IMemory>& dataPtr, int msgType)
{
    jbyteArray obj = NULL;

    // allocate Java byte array and copy data
    if (dataPtr != NULL) {
        ssize_t offset;
        size_t size;
        sp<IMemoryHeap> heap = dataPtr->getMemory(&offset, &size);
        LOGV("postData: off=%d, size=%d", offset, size);
        uint8_t *heapBase = (uint8_t*)heap->base();

        if (heapBase != NULL) {
            const jbyte* data = reinterpret_cast<const jbyte*>(heapBase + offset);
            obj = env->NewByteArray(size);
            if (obj == NULL) {
                LOGE("Couldn't allocate byte array for JPEG data");
                env->ExceptionClear();
            } else {
                env->SetByteArrayRegion(obj, 0, size, data);
            }
        } else {
            LOGE("image heap is NULL");
        }
    }

    // post image data to Java
    env->CallStaticVoidMethod(mCameraJClass, fields.post_event,
            mCameraJObjectWeak, msgType, 0, 0, obj);
    if (obj) {
        env->DeleteLocalRef(obj);
    }
}
```

The evil culprit is the line obj = env->NewByteArray(size);, which allocates a new Java byte array for every frame. For a frame with 480×320 pixels, that means about 230 KB per call (480 × 320 × 1.5 bytes, assuming the default YUV420SP preview format with 12 bits per pixel), and the allocation alone takes some time. Even worse, this buffer must later be freed by the Garbage Collector, which takes even more time. Thus, the task is to avoid these allocations. I don't care about compatibility with existing applications, and I want to keep the changes minimal. What I did is just a dirty hack, but it works quite well for me.
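To make the numbers concrete, the sketch below computes the size of one preview frame and how much short-lived memory the unpatched copyAndPost produces per second. It assumes the YUV420SP (NV21) layout: a full-resolution Y plane followed by one interleaved V/U sample pair per 2×2 block of pixels. The frame rate is only an assumed example value, not a measurement, and the function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>

// Bytes in one NV21 (YUV420SP) frame: a full Y plane plus an interleaved
// V/U plane with one sample pair per 2x2 block of pixels (12 bits per pixel).
// Hypothetical helper for illustration only.
std::size_t nv21FrameBytes(std::size_t width, std::size_t height) {
    assert(width % 2 == 0 && height % 2 == 0);  // NV21 needs even dimensions
    std::size_t lumaBytes = width * height;
    std::size_t chromaBytes = (width / 2) * (height / 2) * 2;  // one V and one U per block
    return lumaBytes + chromaBytes;
}

// Bytes the unpatched copyAndPost() allocates per second, because every
// frame becomes a fresh Java byte[] that the GC must reclaim later.
std::size_t garbageBytesPerSecond(std::size_t width, std::size_t height,
                                  std::size_t framesPerSecond) {
    return nv21FrameBytes(width, height) * framesPerSecond;
}
```

For 480×320 this gives 230,400 bytes per frame; at an assumed 15 frames per second that is 3,456,000 bytes of garbage every second.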

My approach is to allocate a Java byte array once and reuse it for every frame. First I added the following three variables to android_hardware_Camera.cpp:

```cpp
static Mutex sPostDataLock;                          // A mutex that synchronizes access to sCameraPreviewArrayGlobal
static jbyteArray sCameraPreviewArrayGlobal = NULL;  // Global reference to the buffer that is reused
static size_t sCameraPreviewArraySize = 0;           // Size of the buffer (or 0 if the buffer is not yet used)
```

To actually use the buffer, I replaced the function copyAndPost with the following code:

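```cpp
void JNICameraContext::copyAndPost(JNIEnv* env, const sp<IMemory>& dataPtr, int msgType)
{
    // Called from postData(), which holds sPostDataLock; that lock guards the shared buffer.
    jbyteArray obj = NULL;

    if (dataPtr != NULL) {
        ssize_t offset;
        size_t size;
        sp<IMemoryHeap> heap = dataPtr->getMemory(&offset, &size);
        LOGV("postData: off=%d, size=%d", offset, size);
        uint8_t *heapBase = (uint8_t*)heap->base();

        if (heapBase != NULL) {
            const jbyte* data = reinterpret_cast<const jbyte*>(heapBase + offset);

            // HACK: allocate the shared buffer only if there is none yet or its size changed
            if (sCameraPreviewArrayGlobal == NULL || sCameraPreviewArraySize != size) {
                if (sCameraPreviewArrayGlobal != NULL) {
                    env->DeleteGlobalRef(sCameraPreviewArrayGlobal);
                    sCameraPreviewArrayGlobal = NULL;
                    sCameraPreviewArraySize = 0;
                }
                jbyteArray localArray = env->NewByteArray(size);
                if (localArray != NULL) {
                    // Promote to a global reference so the array survives this JNI call
                    sCameraPreviewArrayGlobal = (jbyteArray)env->NewGlobalRef(localArray);
                    env->DeleteLocalRef(localArray);
                    if (sCameraPreviewArrayGlobal != NULL) {
                        sCameraPreviewArraySize = size;
                    }
                }
            }

            if (sCameraPreviewArrayGlobal == NULL) {
                LOGE("Couldn't allocate byte array for JPEG data");
                env->ExceptionClear();
            } else {
                // Overwrite the shared buffer with the new frame
                env->SetByteArrayRegion(sCameraPreviewArrayGlobal, 0, size, data);
                obj = sCameraPreviewArrayGlobal;
            }
        } else {
            LOGE("image heap is NULL");
        }
    }

    // post image data to Java (NULL if nothing was copied); the global
    // reference is kept for the next frame, so it is not deleted here
    env->CallStaticVoidMethod(mCameraJClass, fields.post_event,
            mCameraJObjectWeak, msgType, 0, 0, obj);
}
```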

If there is no buffer yet or it has the wrong size, a new buffer is allocated; otherwise the buffer is just reused. This hack definitely has some nasty side effects in common situations: every other message that goes through copyAndPost (for example JPEG data, as the log message hints) now lands in the same shared buffer. However, to be nice, we should at least delete the global reference to our buffer when the camera is released. Therefore, I added the following code to the end of android_hardware_Camera_release:

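```cpp
{
    // Take the lock before checking, so a frame being posted concurrently
    // cannot race with the cleanup
    Mutex::Autolock _l(sPostDataLock);
    if (sCameraPreviewArrayGlobal != NULL) {
        env->DeleteGlobalRef(sCameraPreviewArrayGlobal);
        sCameraPreviewArrayGlobal = NULL;
    }
    sCameraPreviewArraySize = 0;
}
```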
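Stripped of JNI, the buffer policy of the patched copyAndPost and the cleanup in android_hardware_Camera_release boil down to the pattern below. This is a plain C++ model for illustration, not Android code: std::vector stands in for the Java byte array behind the global reference, std::mutex for sPostDataLock, and the class and method names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <mutex>
#include <vector>

// Hypothetical model of the hack: one shared frame buffer that is
// reallocated only when the frame size changes.
class ReusableFrameBuffer {
public:
    // Mirrors the patched copyAndPost(): copies the frame into the shared
    // buffer and hands that buffer to the callback while the lock is held,
    // just as the Java callback runs inside postData().
    template <typename Callback>
    void post(const std::uint8_t* data, std::size_t size, Callback onFrame) {
        assert(data != nullptr || size == 0);
        std::lock_guard<std::mutex> guard(lock_);           // plays the role of sPostDataLock
        if (buffer_.size() != size) {                       // no buffer yet or size changed
            std::vector<std::uint8_t>(size).swap(buffer_);  // the only allocation
            ++allocations_;
        }
        if (size > 0) {
            std::memcpy(buffer_.data(), data, size);        // overwrites the previous frame
        }
        onFrame(buffer_);
    }

    // Mirrors the cleanup added to android_hardware_Camera_release().
    void release() {
        std::lock_guard<std::mutex> guard(lock_);
        std::vector<std::uint8_t>().swap(buffer_);          // actually frees the storage
    }

    // Not synchronized; meant for single-threaded inspection only.
    std::size_t allocations() const { return allocations_; }

private:
    std::mutex lock_;
    std::vector<std::uint8_t> buffer_;
    std::size_t allocations_ = 0;
};
```

Posting two frames of the same size allocates only once; a different frame size, or the first frame after release(), allocates again, which is exactly when the patched native code calls NewByteArray.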

Finally, I had to change the mutex used in the function postData. The Java patch below stops passing the camera image to another thread, so the thread that calls postData is the same thread that runs my Java code. Normally postData holds the mutex mLock for the whole call, through the line Mutex::Autolock _l(mLock);. Since that mutex is not recursive, a camera function called from my Java code that also takes mLock would deadlock. To be able to call camera functions from that Java code, I replaced this line with Mutex::Autolock _l(sPostDataLock);, which also protects the shared buffer.
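The effect of the lock swap can be modelled in a few lines of plain C++. This is a hypothetical sketch, not Android code: std::mutex stands in for Android's Mutex, and the class and method names are made up. The patched postData holds only the post lock while the callback runs on the same thread, so a camera call from inside the callback that needs the context lock (the role of mLock) can still take it. Had postData kept holding mLock, that call would try to lock a non-recursive mutex its own thread already owns (a deadlock in practice, undefined behaviour for std::mutex), so only the working variant is modelled.

```cpp
#include <cassert>
#include <functional>
#include <mutex>

// Hypothetical model of the locking in the patched JNICameraContext.
// Only the locks are modelled; nothing here talks to a real camera.
class CameraContextModel {
public:
    // Models the patched postData(): holds the post lock (not the context
    // lock) while the Java callback runs synchronously on the same thread.
    void postData(const std::function<void(CameraContextModel&)>& callback) {
        std::lock_guard<std::mutex> guard(postDataLock_);
        ++framesPosted_;
        callback(*this);
    }

    // Models a camera function that acquires the context lock.
    // postData() no longer holds that lock, so calling this from the
    // callback does not re-lock a mutex owned by the current thread.
    void release() {
        std::lock_guard<std::mutex> guard(lock_);
        released_ = true;
    }

    int framesPosted() const { return framesPosted_; }
    bool released() const { return released_; }

private:
    std::mutex lock_;          // plays the role of mLock
    std::mutex postDataLock_;  // plays the role of sPostDataLock
    int framesPosted_ = 0;
    bool released_ = false;
};
```

A callback that calls release() while postData() is still on the stack is exactly the situation the second mutex makes safe.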

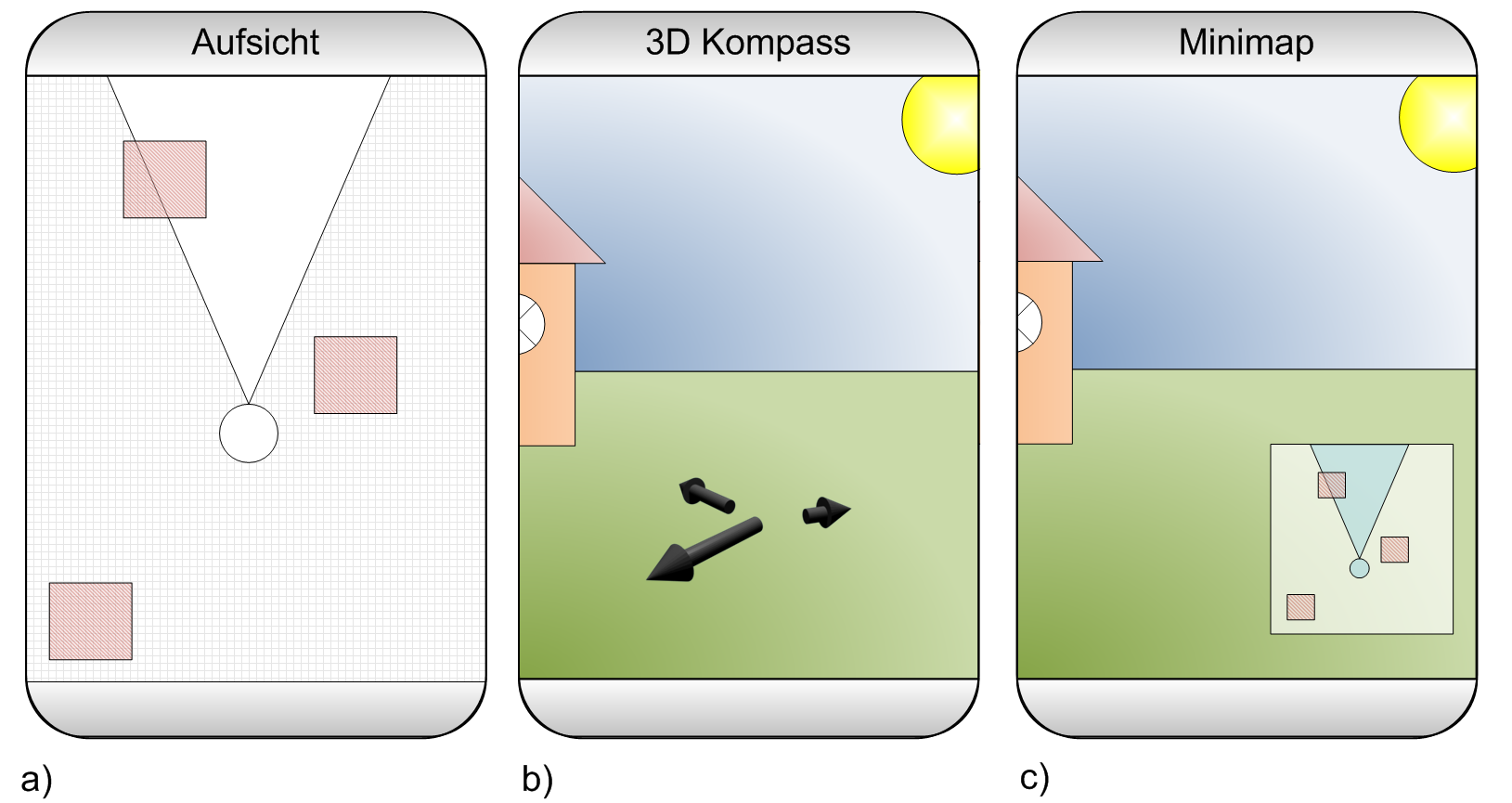

Outsmart Android’s message queue

Unfortunately, this is only the first half of our customization. Somewhere deep inside the system, probably at the driver level (been there once, don't want to go there again), is a thread that pumps the camera images into the system. Each frame ends up in the Java code of Camera.java, where it is delivered to the method postEventFromNative. From there, however, the frame is not delivered directly to our application but takes a detour via Android's message queue: postEventFromNative posts a message, and the callback is only invoked later by the event handler's handleMessage method. This is a real problem once we reuse our frame buffer. The detour makes delivery asynchronous, and since the native code keeps overwriting the buffer, our callback may read a buffer that already holds (part of) a newer frame, which leads to corrupted frames. To avoid this detour, the delivery path must be changed. The easiest solution (for me) is to take the code snippet that handles this callback from the method handleMessage:

```java
case CAMERA_MSG_PREVIEW_FRAME:
    if (mPreviewCallback != null) {
        mPreviewCallback.onPreviewFrame((byte[])msg.obj, mCamera);
        if (mOneShot) {
            mPreviewCallback = null;
        }
    }
    return;
```

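and move it into the method postEventFromNative, right after the check that the Camera object c is not null and before the message is posted to the event handler: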

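```java
if (what == CAMERA_MSG_PREVIEW_FRAME) {
    // Deliver preview frames synchronously on the calling thread
    // instead of posting them to the event handler's message queue
    if (c.mPreviewCallback != null) {
        c.mPreviewCallback.onPreviewFrame((byte[])obj, c);
        if (c.mOneShot) {
            c.mPreviewCallback = null;
        }
    }
    return;
}
```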
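The corruption caused by the detour can be reproduced with a small single-threaded model. It is a hypothetical sketch: the struct, its members, and the std::queue of closures standing in for the handler's message queue are all made up. It writes frame numbers into one shared buffer; queued delivery lets every callback see whatever the buffer holds when the queue is finally drained, while synchronous delivery, as in the patched postEventFromNative, sees each frame. Real threads make it worse, because the buffer can also change while onPreviewFrame is still reading it.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <functional>
#include <queue>
#include <vector>

// Hypothetical model of the two delivery paths in Camera.java.
// One shared buffer plays the role of the reused Java byte[].
struct DeliveryModel {
    std::vector<std::uint8_t> sharedBuffer = std::vector<std::uint8_t>(4, 0);
    std::queue<std::function<void()>> messageQueue;  // stands in for the handler's queue
    std::vector<std::uint8_t> seenFirstBytes;        // what the app's callback observed

    // The application's onPreviewFrame: records the first byte it sees.
    void onPreviewFrame(const std::vector<std::uint8_t>& frame) {
        seenFirstBytes.push_back(frame[0]);
    }

    // Native side: write frame number n into the shared buffer, then deliver it.
    void nativeFrame(std::uint8_t n, bool synchronous) {
        std::fill(sharedBuffer.begin(), sharedBuffer.end(), n);
        if (synchronous) {
            onPreviewFrame(sharedBuffer);  // patched postEventFromNative
        } else {
            // original path: the callback runs later, reading the buffer then
            messageQueue.push([this] { onPreviewFrame(sharedBuffer); });
        }
    }

    // The looper draining the queue some time later (handleMessage).
    void drainQueue() {
        while (!messageQueue.empty()) {
            messageQueue.front()();
            messageQueue.pop();
        }
    }
};
```

Delivering frames 1, 2 and 3 through the queue makes the callback observe 3, 3, 3; delivering them synchronously yields 1, 2, 3.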

Moving the callback like this might have some nasty side effects in some not-so-specific situations. If you have done all that, you might want to join the discussion on Issue 2794 (Camera API: Excessive GC caused by preview callbacks) and propose an API change there, so that we find a proper solution for the Camera Preview Callback memory issue (and leave a comment here if you have a better solution).